NVIDIA has introduced Spectrum-XGS Ethernet, a new scale-across networking technology designed to connect distributed data centers into unified giga-scale AI super-factories.

CoreWeave will be among the first to adopt the system, marking a significant step in the evolution of AI infrastructure.

Expanding AI Beyond Traditional Boundaries

In an announcement at Hot Chips 2025, NVIDIA unveiled Spectrum-XGS Ethernet, a major advancement in data center connectivity.

The technology addresses a growing challenge: AI demand is surging, but many facilities are reaching the limits of power, space, and efficiency.

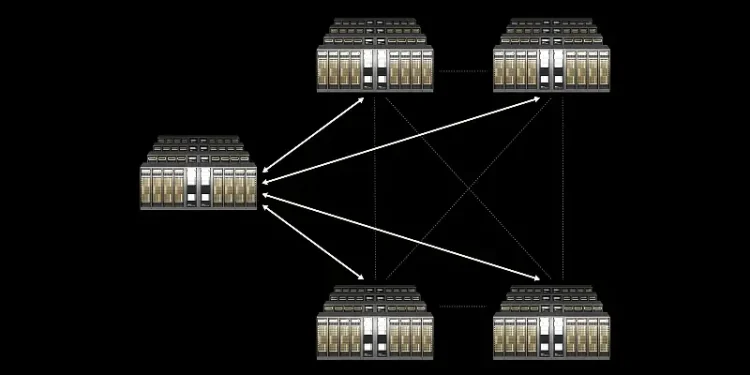

NVIDIA's proposed solution is “scale-across” networking, a new model that allows geographically separate data centers to operate as one.

Unlike scale-up (making an individual system or rack more powerful) or scale-out (adding more systems and clusters within a single facility), scale-across links multiple sites into a single AI super-factory capable of giga-scale computing.

Why Spectrum-XGS Ethernet Matters

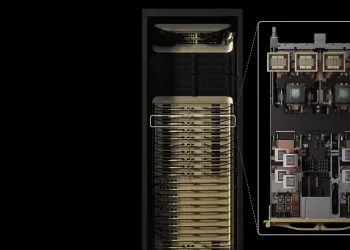

Spectrum-XGS Ethernet is integrated into NVIDIA’s Spectrum-X platform and introduces features such as adaptive congestion control, precision latency management, and end-to-end telemetry. These functions help maintain stable, predictable performance across long distances.

By accelerating multi-GPU and multi-node communication, the system nearly doubles the performance of the NVIDIA Collective Communications Library (NCCL), according to NVIDIA, making it especially relevant for AI workloads where reliability and speed are critical.
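To illustrate why distance matters for congestion control and latency management between sites, the following minimal sketch estimates propagation delay and the amount of data in flight on a long fiber link. It is a back-of-the-envelope calculation, not a description of how Spectrum-XGS works internally. The distances, the 400 Gb/s link rate, and the function names are assumptions chosen for illustration, and the fiber speed is the usual approximation of about two-thirds the speed of light.

```python
# Illustrative only: rough latency and bandwidth-delay-product estimates
# for a link between two data centers. Distances and link rate are
# hypothetical values, not figures from NVIDIA.

FIBER_SPEED_KM_PER_S = 200_000  # light in optical fiber, roughly 2/3 of c


def one_way_delay_ms(distance_km: float) -> float:
    """Propagation delay in milliseconds over a fiber path of this length."""
    return distance_km / FIBER_SPEED_KM_PER_S * 1000


def bandwidth_delay_product_mb(distance_km: float, link_gbps: float) -> float:
    """Megabytes that must be in flight to keep the link full for one round trip."""
    rtt_s = 2 * distance_km / FIBER_SPEED_KM_PER_S
    bits_in_flight = link_gbps * 1e9 * rtt_s
    return bits_in_flight / 8 / 1e6


if __name__ == "__main__":
    for km in (1, 100, 1000, 4000):  # hypothetical site-to-site fiber distances
        print(
            f"{km:>5} km: one-way {one_way_delay_ms(km):.3f} ms, "
            f"in flight at 400 Gb/s: {bandwidth_delay_product_mb(km, 400):.1f} MB"
        )
```

Under these assumptions, a 1,000 km path has a round trip of about 10 ms, so a single 400 Gb/s link holds roughly 500 MB in flight. Congestion feedback therefore arrives far later than it would inside one building, which is the kind of gap that distance-aware tuning is meant to address.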

CoreWeave Leads Early Adoption

CoreWeave, a US-based high-performance computing provider, will be among the first to deploy Spectrum-XGS Ethernet.

The company plans to interconnect its data centers across the United States, creating a unified environment capable of delivering large-scale AI capabilities to innovators and businesses.

This deployment highlights how distributed connectivity can help cloud providers and research institutions expand without being limited by the constraints of a single facility.

Technical and Industry Impact

According to NVIDIA, the Spectrum-X Ethernet platform that Spectrum-XGS builds on provides 1.6x greater bandwidth density than off-the-shelf Ethernet, supporting large-scale, multi-tenant AI operations.

The design targets ultralow latency, scalable bandwidth, and reduced energy consumption, all major factors as AI infrastructure expands globally.

Industry Significance at a Glance

| Feature | Benefit for AI Infrastructure |
|---|---|
| Scale-Across Networking | Connects multiple data centers into one system |
| Precision Latency Management | Delivers consistent performance across distances |
| Adaptive Congestion Control | Improves reliability in multi-node communication |
| 1.6x Bandwidth Density | Supports larger and more complex AI workloads |
| Integration with Spectrum-X | Seamless scaling for enterprises and cloud providers |

Global Context and Historical Perspective

The introduction of scale-across networking mirrors earlier transitions in computing history, such as the shift from localized servers to globally distributed cloud systems. Just as cloud technology enabled rapid innovation in the 2010s, Spectrum-XGS Ethernet aims to provide the backbone for the AI industrial era.

Researchers, businesses, and governments can benefit from this development. Connecting facilities across regions and even continents makes global AI collaboration more feasible, which could accelerate projects in medicine, finance, climate modeling, and logistics.

Social and Economic Implications

The ability to unify distant facilities into giga-scale AI factories carries wide-reaching consequences. For the United States, this could mean stronger competitiveness in the AI race, new opportunities for startups, and growth in jobs tied to high-performance computing.

For society more broadly, scale-across infrastructure may lower costs while increasing sustainability. Improved bandwidth density and energy efficiency help reduce the environmental impact of expanding AI systems.

Key Takeaways

- Spectrum-XGS Ethernet introduces scale-across networking, complementing scale-up and scale-out methods.
- CoreWeave is among the first adopters, using it to interconnect US-based data centers.
- The system nearly doubles performance for AI communication while improving efficiency and scalability.

Final Thoughts

NVIDIA’s Spectrum-XGS Ethernet represents a pivotal shift in how AI data centers are designed and connected. By enabling scale-across networking, it opens the door for distributed facilities to operate as unified giga-scale AI factories.

The move reflects a broader industry trend toward global collaboration, sustainability, and performance-driven innovation.

Sources: NVIDIA.

Prepared by Ivan Alexander Golden, Founder of THX News™, an independent news organization delivering timely insights from global official sources. Combines AI-analyzed research with human-edited accuracy and context.