NVIDIA has launched Rubin CPX, a groundbreaking GPU engineered to handle massive-context inference for million-token coding and generative video applications.

NVIDIA unveiled the Rubin CPX GPU at the AI Infra Summit. Designed for massive-context processing, the GPU allows AI systems to process far longer sequences than existing architectures. NVIDIA positions it as a way to transform both software development and creative AI applications.

Introducing a New Class of GPU

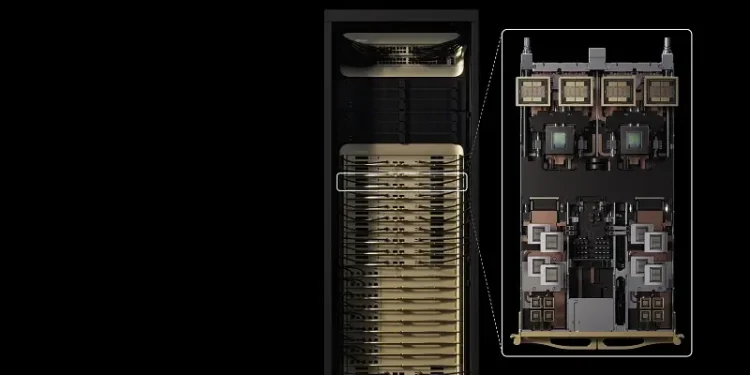

The Rubin CPX GPU stands apart from previous NVIDIA hardware by focusing on massive-context inference, which lets advanced AI models reason across millions of tokens at once. Built on the NVIDIA Rubin architecture, CPX uses a monolithic die design and offers up to 30 petaflops of compute at NVFP4 precision, along with 128GB of GDDR7 memory.

Integrated Performance with Vera Rubin Platform

The GPU sits at the heart of the Vera Rubin NVL144 CPX platform, which combines 8 exaflops of AI compute with 100TB of fast memory. According to NVIDIA, the system delivers 7.5x the AI performance of the NVIDIA GB300 NVL72 and 1.7 petabytes per second of memory bandwidth.

Performance and Scale

Rubin CPX delivers 3x faster attention than the GB300 NVL72 baseline, allowing AI models to process longer sequences without slowing down. With integrated video encoders and decoders, the GPU also targets growing demand for long-format video generation and advanced search applications.

Applications Across Industries

Several innovators are already exploring Rubin CPX for transformative use cases:

Cursor: Accelerating developer productivity with intelligent coding tools inside advanced code editors.

Runway: Supporting generative video workflows for cinematic content and visual effects.

Magic: Powering AI agents capable of reasoning over entire codebases and long interaction histories.

Technical Advancements

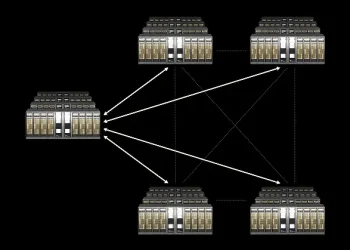

The Rubin CPX GPU is designed for efficiency and scalability. NVIDIA has paired it with both the Quantum-X800 InfiniBand and Spectrum-X Ethernet networking platforms, so organizations can scale out deployments on either fabric.

System Features and Benefits

- Up to 30 petaflops of NVFP4 compute power.

- 128GB of efficient GDDR7 memory for demanding workloads.

AI Ecosystem and Support

Rubin CPX is fully integrated with the NVIDIA AI stack, including CUDA-X libraries, Nemotron multimodal models, and Dynamo for scaling inference. Enterprises can deploy production-ready models with NVIDIA AI Enterprise, leveraging optimized frameworks, libraries, and NIM microservices.

Market and Monetization Potential

The Vera Rubin NVL144 CPX platform offers unprecedented commercial opportunities. According to NVIDIA, companies can generate up to $5 billion in token revenue for every $100 million invested in Rubin CPX infrastructure.
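
To put that claim in proportion, the short sketch below works out the implied multiple. It assumes the ratio scales linearly with investment, which NVIDIA's statement does not say; the function name `max_token_revenue` is hypothetical and used only for illustration.

```python
# NVIDIA's stated upper bound: $5 billion in token revenue
# per $100 million invested in Rubin CPX infrastructure.
CLAIMED_REVENUE_USD = 5_000_000_000
CLAIMED_INVESTMENT_USD = 100_000_000

# Implied revenue multiple per dollar invested.
REVENUE_MULTIPLE = CLAIMED_REVENUE_USD / CLAIMED_INVESTMENT_USD


def max_token_revenue(investment_usd: float) -> float:
    """Upper-bound token revenue for a given investment.

    Assumption (not from NVIDIA): the claimed ratio scales linearly
    to other investment sizes.
    """
    return investment_usd * REVENUE_MULTIPLE


if __name__ == "__main__":
    print(f"Implied multiple: {REVENUE_MULTIPLE:.0f}x")
    print(f"$10M invested -> up to ${max_token_revenue(10_000_000):,.0f}")
```

The figure is an "up to" ceiling from the vendor, so the result is best read as a best-case bound rather than a forecast.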

Rubin CPX vs GB300 NVL72

| Specification | Vera Rubin NVL144 CPX | GB300 NVL72 |
|---|---|---|
| AI Performance | 8 exaflops | 1.07 exaflops |
| Memory Capacity | 100TB | 12TB |
| Memory Bandwidth | 1.7 PB/s | 0.23 PB/s |
| Attention Performance | 3x faster | Baseline |
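
As a quick sanity check, the ratios implied by the table can be computed directly. The sketch below uses only the figures listed in the table; the names `SPECS` and `speedups` are illustrative.

```python
# (new platform, GB300 NVL72) values copied from the comparison table.
SPECS = {
    "AI Performance (exaflops)": (8.0, 1.07),
    "Memory Capacity (TB)": (100.0, 12.0),
    "Memory Bandwidth (PB/s)": (1.7, 0.23),
}


def speedups(specs: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Return the new/old ratio for each row, rounded to two decimals."""
    return {name: round(new / old, 2) for name, (new, old) in specs.items()}


if __name__ == "__main__":
    for name, factor in speedups(SPECS).items():
        print(f"{name}: {factor}x")
```

The AI performance ratio comes out at roughly 7.48, which matches the 7.5x figure NVIDIA quotes once rounded.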

Availability

The Rubin CPX GPU and the Vera Rubin NVL144 CPX platform are scheduled for release at the end of 2026. Their arrival is expected to influence how companies approach large-scale coding tasks and generative video, while leaving open questions about cost, accessibility, and integration timelines.

As the technology matures, industry analysts will be watching closely to see whether Rubin CPX delivers on its technical promises and how quickly developers adopt it across sectors. For now, NVIDIA’s announcement signals an ambitious step forward in the competition to provide hardware capable of supporting the next wave of AI workloads.

Looking Ahead

The launch of Rubin CPX sets the stage for an evolving debate in AI infrastructure. Observers will track how quickly enterprises move to integrate the platform, how startups balance opportunity with investment challenges, and whether rival chipmakers respond with competing solutions. The answers to these questions will shape the broader trajectory of long-context AI applications in the coming years.

Sources: NVIDIA.

Prepared by Ivan Alexander Golden, Founder of THX News™, an independent news organization delivering timely insights from global official sources. Combines AI-analyzed research with human-edited accuracy and context.